Visualizing your files by the file share or disk they reside in

Experimental - Not all use cases may be covered and results are not guaranteed.

When you are dealing with large data volumes spread across multiple file shares, or crawling many local disks, you may want to see that data broken down by share or drive.

Scenario

Your organization has many file shares:

\\server-us-1\alpha

\\server-us-2\beta

\\server-us-3\gamma

\\server-ca-west\alpha

\\server-ca-east\beta

\\server-ca-cent\gamma

After crawling these shares, you end up with an index of 100,000,000 files! Maybe that is expected, maybe it is a surprise. Either way, it can be helpful to find out which of these shares have the largest storage footprint, or which share has the most data to clean up. This is possible by implementing the _shiny-root pipeline.

_shiny-root

_shiny-root is a pipeline configuration that is injected into the Analytics Engine (your index). Once this pipeline has been configured, future data that is crawled into the configured index will automatically have the pipeline applied. You can set it and forget it.

Configuration

You will need access to the Analytics Engine’s Visualizer to enable this configuration. This will require no downtime or outages.

In your browser, navigate to the Visualizer (e.g. http://localhost:5601).

On the left, click “Dev Tools”.

Copy the following code block and paste it into the Dev Tools console. This command puts the _shiny-root pipeline into the Analytics Engine configuration. From there, it can be applied to any index you want. Click the “run” button to execute.

    PUT _ingest/pipeline/_shiny-root
    {
      "description" : "parse unc shares root and root drive letters",
      "processors": [
        {
          "grok": {
            "field": "parent",
            "patterns": ["%{UNC_SHARE:uncShare}", "%{UNC_DRIVE:drive}"],
            "pattern_definitions" : {
              "UNC_SHARE" : "^\\\\\\\\([a-zA-Z0-9 `~!@#$%^&()_+={\\[}\\]';.,-]+)\\\\([a-zA-Z0-9 `~!@#$%^&()_+={\\[}\\]';.,-]+)",
              "UNC_DRIVE": "\\w\\:\\\\"
            },
            "ignore_failure" : true
          }
        }
      ]
    }

The response should be

    "acknowledged": true

Copy the following code block and paste it into the Dev Tools console. You will need to substitute <indexname> with your index name or the name of an aggregate. Click the “run” button to execute. This command tells the Analytics Engine to use _shiny-root as the default pipeline for your index, so that all newly indexed data has the share/drive parsed and extracted from the 'parent' field.

    PUT <indexname>/_settings
    {
      "index.default_pipeline": "_shiny-root"
    }

The response should be

    "acknowledged": true

Copy the following code block and paste it into the Dev Tools console. You will need to substitute <indexname> with your index name or the name of an aggregate. This command updates all items in the index with the _shiny-root pipeline, and starts parsing and extracting the share/drive on existing files in the index.

    POST <indexname>/_update_by_query?slices=auto&wait_for_completion=false&conflicts=proceed

The response will be a task ID similar to this one:

    { "task" : "GVDtWEI8Th-_rB-KmJfsvg:29544" }

You can use that task ID to see when the process has completed with the Dev Tools command

    GET _tasks/<task_id>

For example:

    GET _tasks/GVDtWEI8Th-_rB-KmJfsvg:29544

Update your index patterns in the Visualizer by going to Management > Index patterns, selecting the index or aggregate you applied _shiny-root to, and clicking the refresh icon.

You will have at least 2 additional fields:

uncShare

uncShare.keyword

drive

drive.keyword

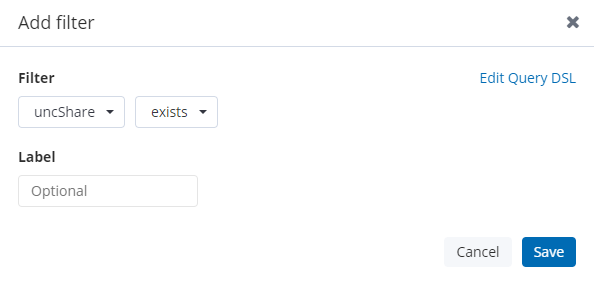
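To get a feel for what the two patterns in the pipeline pick out of the 'parent' field, the sketch below mirrors them with Python's `re` module. It is an approximation only: grok uses a different regular expression engine, the helper name `extract_root` is made up for illustration, and it assumes that `uncShare` receives the whole matched `\\server\share` prefix and that the first matching pattern wins.

```python
import re

# Character class copied from the UNC_SHARE pattern definition (after JSON unescaping).
_NAME = r"[a-zA-Z0-9 `~!@#$%^&()_+={\[}\]';.,-]+"

# Two backslashes, a server name, one backslash, a share name, anchored at the start.
UNC_SHARE = re.compile(r"^\\\\(" + _NAME + r")\\(" + _NAME + r")")
# A word character followed by a colon and a backslash, e.g. C:\
UNC_DRIVE = re.compile(r"\w\:\\")


def extract_root(parent: str) -> dict:
    """Hypothetical helper: return the field the pipeline is expected to add."""
    match = UNC_SHARE.search(parent)
    if match:
        # Assumption: the named grok capture holds the full \\server\share match.
        return {"uncShare": match.group(0)}
    match = UNC_DRIVE.search(parent)
    if match:
        return {"drive": match.group(0)}
    # Neither pattern matched; the pipeline sets ignore_failure, so no field is added.
    return {}


if __name__ == "__main__":
    for path in (r"\\server-us-1\alpha\projects\2020", r"D:\archive\old", "/mnt/data"):
        print(path, "->", extract_root(path))
```

Running a few of your own 'parent' values through a helper like this is a quick way to check whether an unusual share name would fall outside the character class before you re-index.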

You will now have a field on indexed items from the index you configured (either uncShare or drive). To find these items, you can use _exists_:uncShare in the search bar, or add a filter for “uncShare exists”.

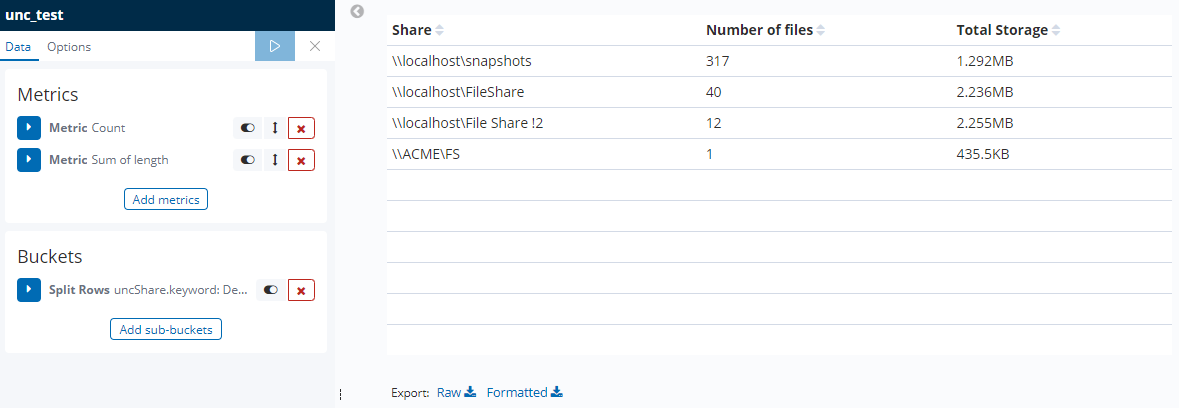

Here is an example of a data table visualization using uncShare.keyword. It allows you to visualize your files by the file share they reside in.
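A data table split by uncShare.keyword corresponds roughly to a terms aggregation on that field. As a sketch under that assumption, the following Python builds such a request body; the function name `shares_table_query`, the aggregation name `by_share`, and the `top_n` parameter are illustrative choices, not part of the product.

```python
import json


def shares_table_query(field: str = "uncShare.keyword", top_n: int = 10) -> dict:
    """Build a search body that counts indexed items per share (illustrative)."""
    return {
        "size": 0,  # return only the aggregation buckets, not the documents
        "query": {"exists": {"field": "uncShare"}},  # same idea as _exists_:uncShare
        "aggs": {
            "by_share": {  # hypothetical aggregation name
                "terms": {"field": field, "size": top_n}
            }
        },
    }


if __name__ == "__main__":
    # Print the body as JSON so it can be pasted into a search request.
    print(json.dumps(shares_table_query(), indent=2))
```

The printed JSON can be pasted into the Dev Tools console beneath `GET <indexname>/_search` to see the per-share counts without building a visualization.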

Uninstall _shiny-root

If you wish to uninstall this functionality, simply run the following command in the Visualizer’s dev tools:

DELETE _ingest/pipeline/_shiny-root